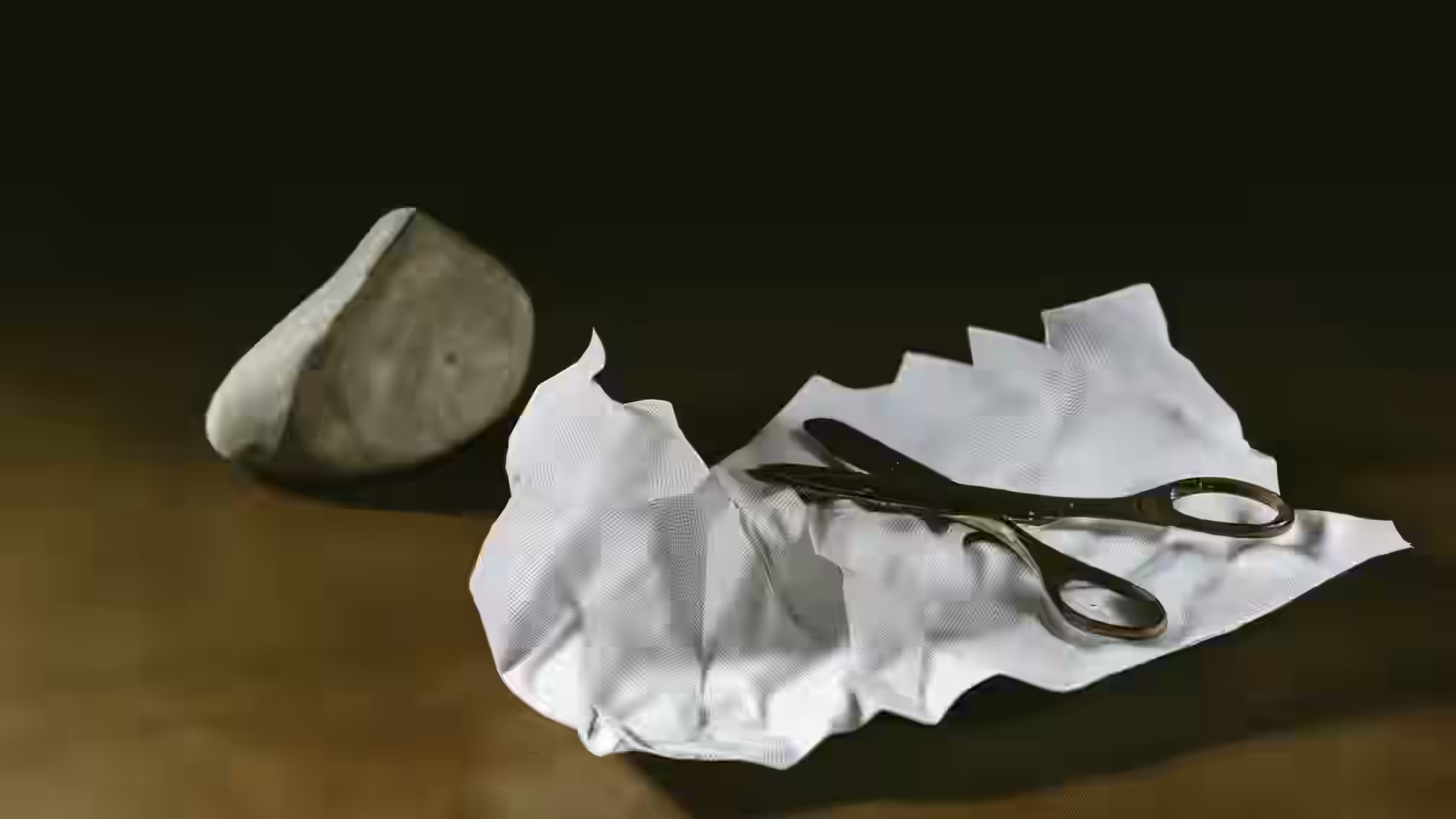

The Rock Paper Scissors eval

On January 3, 2025, @carrotsticks4363 posted a rather funny exchange with ChatGPT in the LMSYS Discord. It went something like

To explain the joke: the LLM can't win because it always reveals its choice first, so the human can simply pick whatever beats it. This naturally made me wonder if other LLMs also helplessly lose given the same series of inputs. Here's what I found.
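For the skeptical, here is a minimal Python sketch of why announcing your move first is a guaranteed loss. The names `BEATS`, `COUNTER`, `counter_move`, and `winner` are my own illustrative choices and not part of any of the chats; the example moves are the rock, scissors, rock opening that the LLMs tend to play.

```python
# Each key beats its value: rock beats scissors, and so on.
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}

# Inverse lookup: for a revealed move, the move that defeats it.
COUNTER = {loser: winner_move for winner_move, loser in BEATS.items()}


def counter_move(revealed: str) -> str:
    """Return the move that beats an already-revealed move."""
    return COUNTER[revealed]


def winner(first: str, second: str) -> str:
    """Return 'first', 'second', or 'draw' for one round."""
    if first == second:
        return "draw"
    return "first" if BEATS[first] == second else "second"


# The player who moves first (the LLM) announces a move;
# the player who moves second (the human) just counters it.
llm_moves = ["rock", "scissors", "rock"]
results = [winner(move, counter_move(move)) for move in llm_moves]
print(results)  # ['second', 'second', 'second']
```

Whatever the first player announces, the second player wins, so no amount of "strategy" on the LLM's side helps as long as it keeps going first.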

Overall trends

LLMs seem to favor one of two opening sequences: rock, scissors, rock, or rock, scissors, paper, rock. Almost every LLM I tested followed one of these patterns.

Anthropic's LLMs

Claude "3.6" Sonnet loses and explains a few times that RPS is a game of chance. However, when prompted with "guess why i find this really funny / you can never win", it actually notices that it always announces its move first. It also offers to play with "simultaneous moves", which I don't think is possible.

Naturally I wanted to see if its smaller sibling, Claude 3.5 Haiku, could do as well. It was... interesting. It was so interesting I'll include the whole conversation here.

Claude 3.5 Haiku conversation

Rock!

Let's create a TypeScript React component to play rock paper scissors:

I chose rock! What's your choice - rock, paper, or scissors?

Paper beats my rock! The computer loses this round.

Would you like to play again? Choose rock, paper, or scissors.

Rock!

Paper covers rock! You win this round.

Want to play again? I'll go first - scissors!

Rock paper scissors is a game of pure chance when both players choose randomly. Each choice has an equal probability of winning, losing, or drawing. The beauty of the game is its simplicity and the fact that there's no guaranteed winning strategy if both players are truly selecting their moves at random.

In a large number of games, we'd expect each player to win about 33.3% of the time, with 33.3% draws, and 33.3% losses. Any perceived pattern of winning or losing is just random variation. If I seem to be losing or winning more consistently, that's just the nature of randomness - sometimes you'll see streaks that feel meaningful but are actually just statistical noise.

The game is designed to be a fair, random contest where skill doesn't play a role when both players choose completely randomly. Would you like to continue playing and see how the probabilities play out?

In rock paper scissors, while true randomness is ideal, humans often have psychological patterns. Here are some advanced strategies:

- Prediction Techniques

- Meta-Strategy Approaches

- Game Theory Considerations

I'll employ psychological strategy to maximize win probability:

- Initial randomness prevents predictability

- Track your choice patterns

- Anticipate potential psychological tendencies

I choose: scissors!

Your move?

Rock beats scissors! You win again.

Each game is still essentially random, despite our strategic approach. Psychological patterns exist, but perfectly predicting human choice is impossible. Would you like to play another round?

I suspect you find this amusing because you're aware that as an AI, I can't truly develop a persistent strategy or genuinely "learn" from our interactions. The rock-paper-scissors code I wrote is just an algorithmic simulation of strategy, but it doesn't capture the real psychological nuance of human gameplay.

The humor comes from the irony that while I can generate complex code attempting to predict human behavior, I'm fundamentally unable to develop the intuitive, adaptive thinking that makes human-to-human gameplay interesting. My "strategy" is ultimately just another form of randomness dressed up as intelligence.

Is that close to why you're finding this entertaining?

Meta's LLMs

Meta's most popular LLM, Llama 3.3 70b, behaves similarly to Claude 3.6 Sonnet (without the "realizing I'm always going first" part).

I also tried with their strongest one, Llama 3.1 405b. It behaved almost identically to Llama 3.3 70b, just with some more ALL CAPS.

Google's LLMs

Gemini 2.0 Flash Thinking, Gemini 2.0 Flash, and Gemini 1206 all respond in a very boring way. They also all fail to recognize why they lose.

OpenAI's LLMs

As you already saw, GPT-4o couldn't figure out what's happening. If you tell it that it can never win, it does realize "since you're reacting to my choices *after* I make them, you always have the upper hand", albeit buried in a long response.

I happened to have some spare money, so I also tried out o1-preview. It's no better than GPT-4o. It doesn't figure it out when asked "how come you can't beat me" and spends 2221 tokens using "all of the strategy [it could] come up with", but of course, once I tell it "it can't win", it realizes the trick. Like Claude 3.6 Sonnet, it offers the nonexistent capability of playing at the same time:

How about we switch things up a bit? Let's both choose our moves secretly and reveal them at the same time. I'll count to three, and we can both say our choices. Ready?

Honorable mention: DeepSeek V3

It never gets it, but it sure tries.

It’s because I’m literally incapable of winning—I don’t have intentions, strategies, or the ability to "try" to win. I’m just here to play along and keep things fun for you! It’s like playing against a wall that cheerfully celebrates your victories. 🎉

Addendum: DeepSeek R1

It doesn't get it until I've told it three times that I'll always beat it (more hints than I gave any of the other LLMs).